In the rapidly evolving world of Generative AI, we often think of “Agents” as specialized workers. You have a Coding Agent, a Writing Agent, or a Data Analysis Agent. But what happens when you have a task that doesn’t fit neatly into one of these boxes? Or what if you don’t know which agent you need?

Enter the Abstract AI Agent: a conceptual leap in how we interact with Large Language Models (LLMs). Instead of being hard-coded for a single job, an Abstract Agent is a shapeshifter. It doesn’t just do the work; it first decides how the work should be done, by building a custom prompt for itself that is tailored to the specific task.

I recently built an implementation of this concept, Abstract Agent AI (with the help of Google’s AI Studio), and it offers a fascinating glimpse into the future of adaptive AI.

The Problem: “One Prompt Fits All” Doesn’t Work

Most AI applications today rely on static system instructions. A developer writes a “System Prompt” (e.g., “You are a helpful assistant…”), and that prompt governs every interaction.

While this works for general tasks, it fails at nuance. If you ask a general assistant to “debug this Python code,” it might give you a generic explanation. Ask a “Senior Software Engineer” persona the same question, and you are far more likely to get a deep, architectural fix. The quality of the output depends heavily on the specificity of the prompt.

But users shouldn’t have to be “Prompt Engineers” to get the best results.

The Solution: The Abstract Agent

The “Abstract Agent” flips the script. It is an agent designed with one primary skill: Metacognition (thinking about thinking).

Instead of rushing to answer your question, an Abstract Agent acts as a middleman between you and the raw intelligence of the LLM. Here is the workflow:

- Input Analysis: You give the agent a raw, perhaps vague, objective (e.g., “Help me plan a marketing launch for a coffee brand”).

- Dynamic Prompt Construction: The Abstract Agent doesn’t generate the marketing plan yet. Instead, it uses an internal “Builder” process to ask:

  - What would give this task the best chance of success?

  - What persona is needed? (e.g., a Chief Marketing Officer with 10 years of experience)

  - What are the constraints? (e.g., limited budget, focus on social media)

- Instantiation: It generates a Custom Prompt—a strict, highly optimized set of instructions tailored exactly to your request.

- Execution: A new, temporary agent is spun up using this custom prompt to execute the task.
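The four steps above can be sketched in TypeScript, the language the app is written in. This is a minimal illustration, not the app’s actual code: the `LLM` function type, the `PromptSpec` shape, the builder meta-prompt, and the stubbed model are all hypothetical, chosen so the example runs without an API key.

```typescript
// Hypothetical model-call signature: system prompt + user message -> completion text.
type LLM = (systemPrompt: string, userMessage: string) => Promise<string>;

// Hypothetical shape of what the Builder step is asked to produce.
interface PromptSpec {
  persona: string;
  constraints: string[];
  successCriteria: string[];
}

// Meta-prompt: a prompt whose only job is to design another prompt.
const BUILDER_PROMPT = `You design system prompts for other AI agents.
Given a user objective, reply with JSON only:
{"persona": string, "constraints": string[], "successCriteria": string[]}`;

// Steps 1-2: analyse the objective and ask the model to design the prompt.
async function buildSpec(llm: LLM, objective: string): Promise<PromptSpec> {
  const raw = await llm(BUILDER_PROMPT, objective);
  const spec = JSON.parse(raw) as PromptSpec;
  if (
    typeof spec.persona !== "string" ||
    !Array.isArray(spec.constraints) ||
    !Array.isArray(spec.successCriteria)
  ) {
    throw new Error("Builder returned an unexpected shape");
  }
  return spec;
}

// Step 3: instantiate the custom prompt from the spec.
function renderPrompt(spec: PromptSpec): string {
  return [
    `You are ${spec.persona}.`,
    "Constraints:",
    ...spec.constraints.map((c) => `- ${c}`),
    "Success criteria:",
    ...spec.successCriteria.map((s) => `- ${s}`),
  ].join("\n");
}

// Step 4: execute the task with a fresh, temporary agent that uses the custom prompt.
async function runAbstractAgent(llm: LLM, objective: string): Promise<string> {
  const spec = await buildSpec(llm, objective);
  const customPrompt = renderPrompt(spec);
  return llm(customPrompt, objective);
}

// Stub model for illustration: answers the builder with a spec, otherwise echoes the persona.
const stub: LLM = async (system, user) =>
  system === BUILDER_PROMPT
    ? JSON.stringify({
        persona: "a Chief Marketing Officer with 10 years of experience",
        constraints: ["Limited budget", "Focus on social media"],
        successCriteria: ["A week-by-week launch plan"],
      })
    : `[${system.split("\n")[0]}] plan for: ${user}`;

runAbstractAgent(stub, "Help me plan a marketing launch for a coffee brand").then(console.log);
```

Parsing and validating the builder’s output before using it means a malformed spec fails fast, rather than silently producing a weak prompt for the execution step.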

Inside the “Abstract Agent AI”

The GitHub repository demonstrates this “Agentic Prompt Building” in action. Built with React, TypeScript, and the Google Gemini API (I have since extended it so it is no longer restricted to Gemini), the app serves as a playground for this architecture.
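One common way to avoid being tied to a single vendor is to hide every model behind one function type and write a small adapter per backend. The sketch below is an assumption about how that could look, not the repository’s code: it targets the OpenAI-compatible `/chat/completions` endpoint that local servers such as Ollama and LM Studio expose, and `ProviderConfig`, `buildChatRequest`, and `openAICompatible` are hypothetical names.

```typescript
// Minimal fetch-like signature so the sketch does not depend on DOM or Node typings.
type FetchLike = (
  url: string,
  init: { method: string; headers: Record<string, string>; body: string },
) => Promise<{ ok: boolean; status: number; json(): Promise<unknown> }>;

// Hypothetical settings shape; not the app's actual settings format.
interface ProviderConfig {
  baseUrl: string; // e.g. "http://localhost:11434/v1" for a local Ollama server
  model: string;
  apiKey?: string; // local servers usually don't need one
}

// The agent logic only ever sees this function type.
type LLM = (systemPrompt: string, userMessage: string) => Promise<string>;

// Build an OpenAI-compatible chat request (pure, so it is easy to test).
function buildChatRequest(cfg: ProviderConfig, systemPrompt: string, userMessage: string) {
  const headers: Record<string, string> = { "Content-Type": "application/json" };
  if (cfg.apiKey) headers["Authorization"] = `Bearer ${cfg.apiKey}`;
  return {
    url: `${cfg.baseUrl.replace(/\/+$/, "")}/chat/completions`,
    init: {
      method: "POST",
      headers,
      body: JSON.stringify({
        model: cfg.model,
        messages: [
          { role: "system", content: systemPrompt },
          { role: "user", content: userMessage },
        ],
      }),
    },
  };
}

// Adapter: turns a provider config into the generic LLM function.
function openAICompatible(cfg: ProviderConfig, fetchFn: FetchLike): LLM {
  return async (systemPrompt, userMessage) => {
    const { url, init } = buildChatRequest(cfg, systemPrompt, userMessage);
    const res = await fetchFn(url, init);
    if (!res.ok) throw new Error(`Provider returned HTTP ${res.status}`);
    const data = (await res.json()) as { choices?: { message?: { content?: string } }[] };
    const content = data.choices?.[0]?.message?.content;
    if (typeof content !== "string") throw new Error("Unexpected response shape");
    return content;
  };
}

// Example (hypothetical settings): point the same agent code at a local model.
// const llm = openAICompatible({ baseUrl: "http://localhost:11434/v1", model: "your-local-model" }, fetch);
```

Injecting `fetch` keeps the adapter testable; in a browser or Node 18+ you would pass the global `fetch`. A Gemini adapter would implement the same `LLM` type, so the agent logic never needs to know which backend it is talking to.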

Key Features of the Concept:

- Prompt-as-Code: The system treats prompts not as static text strings, but as dynamic objects that can be generated, refined, and executed programmatically.

- Gemini Integration: By leveraging the massive context window and reasoning capabilities of models like Gemini, the Abstract Agent can handle complex “meta-prompts” (prompts that generate other prompts) without losing coherence.

- Works Locally: System prompts matter even more for the less capable local models that run on consumer hardware, so a tailored prompt gets more value out of your local models (at the cost of extra context).

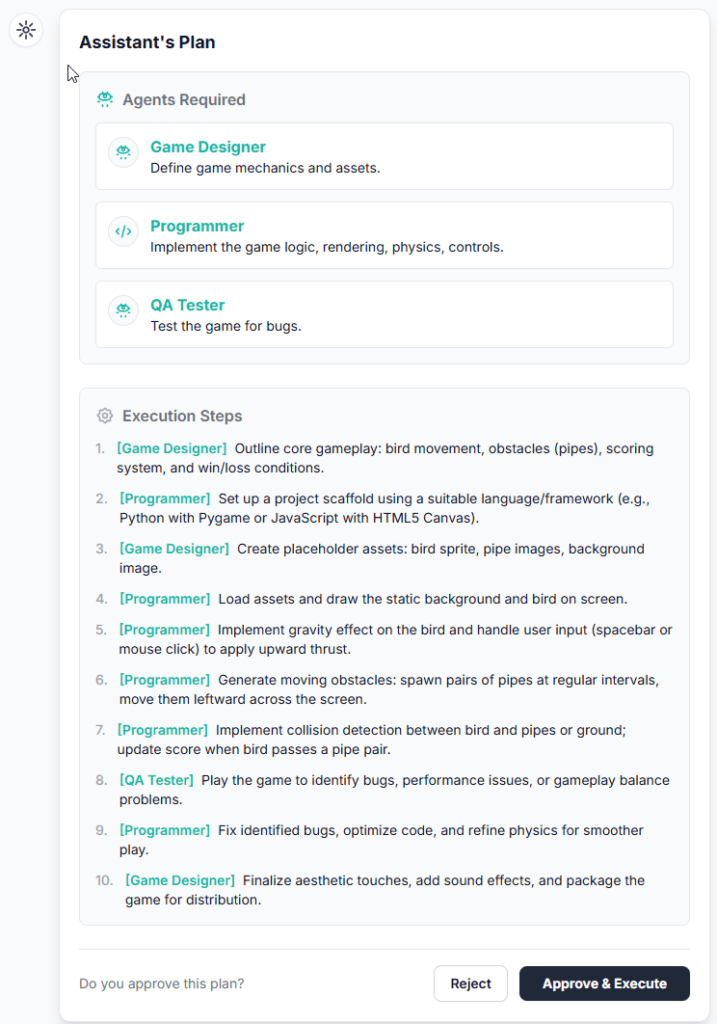

- Planning: Each request is broken down into a “prompt” (the agent that should do the work) and a list of “tasks”, so the full plan, including what type of agent is needed, is worked out up front and then executed.
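A rough sketch of the planning step, under the same assumptions as any example here: the `Plan` JSON shape, `PLANNER_PROMPT`, and the sequential execution loop are illustrative, not taken from the repository. It also shows the Prompt-as-Code idea, since the agent’s system prompt is rendered from a plain object rather than stored as a fixed string.

```typescript
// Hypothetical model-call signature: system prompt + user message -> completion text.
type LLM = (systemPrompt: string, userMessage: string) => Promise<string>;

// Hypothetical plan shape: one agent definition (the "prompt") plus ordered tasks.
interface Plan {
  agent: { persona: string; instructions: string };
  tasks: string[];
}

const PLANNER_PROMPT = `Break the user's request into a plan. Reply with JSON only:
{"agent": {"persona": string, "instructions": string}, "tasks": string[]}`;

// Validate the planner's output before trusting it.
function parsePlan(raw: string): Plan {
  const plan = JSON.parse(raw) as Plan;
  const ok =
    typeof plan?.agent?.persona === "string" &&
    typeof plan.agent.instructions === "string" &&
    Array.isArray(plan.tasks) &&
    plan.tasks.length > 0;
  if (!ok) throw new Error("Planner returned an invalid plan");
  return plan;
}

// Prompt-as-Code: the system prompt is rendered from data, so it can be inspected or refined.
function agentPrompt(plan: Plan): string {
  return `You are ${plan.agent.persona}. ${plan.agent.instructions}`;
}

// Plan once, then run every task with the same generated agent prompt.
async function planAndExecute(llm: LLM, request: string): Promise<string[]> {
  const plan = parsePlan(await llm(PLANNER_PROMPT, request));
  const system = agentPrompt(plan);
  const results: string[] = [];
  for (let i = 0; i < plan.tasks.length; i++) {
    // Each task sees the original request plus the outputs of earlier tasks.
    const earlier = results.map((r, j) => `Result of task ${j + 1}: ${r}`);
    const message = [
      `Overall request: ${request}`,
      ...earlier,
      `Current task (${i + 1}/${plan.tasks.length}): ${plan.tasks[i]}`,
    ].join("\n");
    results.push(await llm(system, message));
  }
  return results;
}
```

Feeding each task the results of earlier tasks is one simple way to keep a multi-step plan coherent; the trade-off is that the context grows with every step, which matters for the local models mentioned above.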

Why This Matters

This “Abstract” approach shifts the burden of complexity from the user to the AI.

- For Developers: You don’t need to build 50 different “specialized” bots. You build one Abstract Agent that can specialize itself on the fly.

- For Users: You get expert-level outputs without needing to know expert-level terminology. You simply state your goal, and the AI figures out the best way to achieve it.

Conclusion

The Abstract Agent represents the next logical step in AI orchestration. We are moving away from static chatbots toward adaptive intelligence—systems that mold themselves to fit the task at hand.

If you are a developer looking to build smarter AI tools, check out the Abstract Agent AI. It’s a clean, open-source example of how to build an AI that adapts to the given request.

P.S. It works with local models too. You can configure this in the settings.

Observations

- The choice of model matters, because the model needs to know how to plan and accomplish the task, and also what type of person (expertise) is needed to accomplish it.

- Most frontier models handle this pretty well

- Open-source models struggle a bit

- GPT-OSS-20B works very well most of the time but struggles with tool use

- Qwen models struggle unless a larger model is used (the app’s parsing also doesn’t handle Qwen-style output very well)

- Gemma struggles sometimes too
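On the parsing point: one likely culprit (an assumption, since the app’s parser isn’t shown here) is that reasoning models such as Qwen3 wrap their chain of thought in `<think>...</think>` tags, and that reasoning can contain braces that confuse a naive JSON extractor. A more tolerant parser might strip those blocks first and then look for a fenced or bare JSON object. `stripThinking` and `extractJson` are hypothetical helpers.

```typescript
// Remove reasoning blocks such as <think>...</think> before looking for the answer.
function stripThinking(text: string): string {
  let out = text.replace(/<think>[\s\S]*?<\/think>/g, "");
  // Some chat templates put the opening <think> in the prompt, so only the closing tag appears.
  const orphanClose = out.lastIndexOf("</think>");
  if (orphanClose !== -1) out = out.slice(orphanClose + "</think>".length);
  return out.trim();
}

// Find a JSON object in model output that may include prose or a json code fence.
function extractJson(text: string): unknown {
  const cleaned = stripThinking(text);
  // `{3} matches a triple-backtick fence without writing one literally.
  const fenced = cleaned.match(/`{3}(?:json)?\s*([\s\S]*?)`{3}/);
  const candidate = fenced?.[1] ?? cleaned;
  const start = candidate.indexOf("{");
  const end = candidate.lastIndexOf("}");
  if (start === -1 || end <= start) {
    throw new Error("No JSON object found in model output");
  }
  return JSON.parse(candidate.slice(start, end + 1));
}
```

Slicing from the first `{` to the last `}` is deliberately simple and will fail on output containing two separate objects; a sturdier parser would scan for balanced braces or use the provider’s JSON/structured-output mode where one exists.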

It would be interesting if somebody built a benchmark around this: one that tests how well models adapt to a given task and carry it through to full completion under this adaptive agentic pattern.
